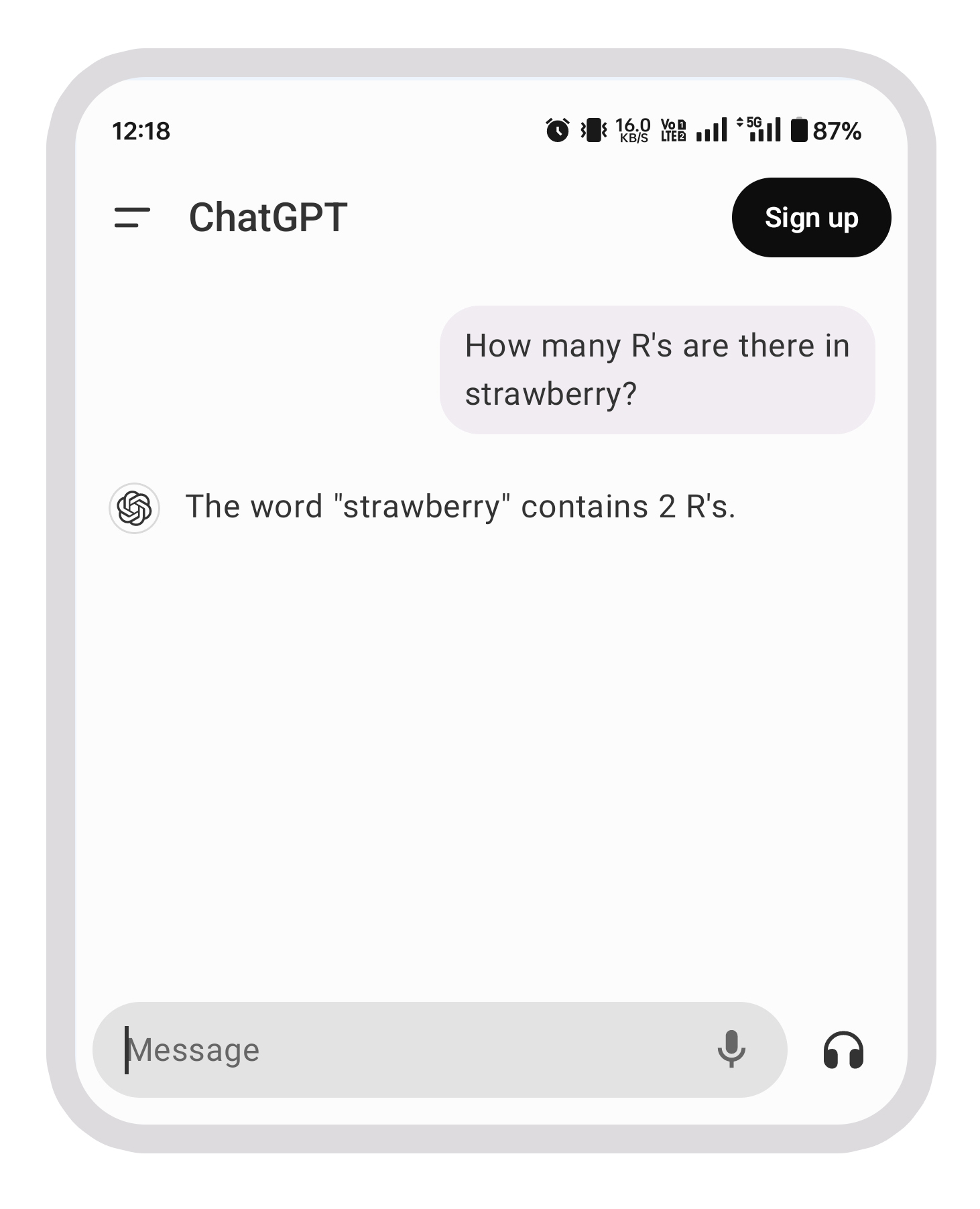

If you ask ChatGPT, as I just did, “How many R’s are there in ‘Strawberry’?” ChatGPT will promptly respond, “There are two ‘R’s in the word ‘Strawberry.’”

Is ChatGPT wrong?

We will come back to that, but first…

We often hear that AI is revolutionizing fields like underwriting, providing insights that used to take weeks to gather. AI systems draw on data from multiple sources and use finely tuned models like XGBoost, LightGBM, and CatBoost, all industry favorites. These models have the potential to influence decision-making in ways that even the most talented actuaries find impressive, which is why they are being adopted so rapidly across a range of use cases.

However, there’s a critical aspect we need to be mindful of: context. All AI models are highly dependent on context. The context provided—or assumed—can significantly influence the outcome.

So, as you implement these models, remember that the responsibility for the outcome still rests on your shoulders. At Empowered Margins, we empower AI implementers like you with governance tools that not only help you manage AI models but also ensure that you apply common-sense context, helping you catch obvious details, like the “R”s in “strawberry.” For instance, one feature, which we call the rules engine, allows an underwriter to control which groups use specific AI models based on their risk profile.
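To make the idea concrete, here is a minimal Python sketch of how a rule-based router of this kind could work. It is an illustration only, not the actual Empowered Margins rules engine: the `Group` fields, the thresholds, and the model names (`conservative_model`, `standard_model`) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Group:
    """A group being underwritten. All fields are hypothetical."""
    name: str
    size: int          # number of members in the group
    loss_ratio: float  # historical claims divided by premium


@dataclass(frozen=True)
class Rule:
    """One underwriter-defined rule: a condition and the model it selects."""
    description: str
    applies: Callable[[Group], bool]
    model: Optional[str]  # None means "no AI model, send to manual review"


# Rules are checked in order and the first match wins.
# Thresholds and model names are illustrative assumptions.
RULES: List[Rule] = [
    Rule("very small groups go to manual review", lambda g: g.size < 10, None),
    Rule("high loss ratio uses a conservative model",
         lambda g: g.loss_ratio > 0.9, "conservative_model"),
    Rule("everything else uses the standard model",
         lambda g: True, "standard_model"),
]


def select_model(group: Group, rules: List[Rule] = RULES) -> Optional[str]:
    """Return the model name chosen by the first matching rule."""
    for rule in rules:
        if rule.applies(group):
            return rule.model
    return None  # no rule matched: fall back to manual review


small = Group("Corner Bakery", size=5, loss_ratio=0.50)
risky = Group("Metro Logistics", size=200, loss_ratio=0.95)
typical = Group("Acme Widgets", size=200, loss_ratio=0.60)
```

With these hypothetical rules, `select_model(small)` returns `None` (manual review), `select_model(risky)` returns `"conservative_model"`, and `select_model(typical)` returns `"standard_model"`. The point of the design is that the underwriter, not the model, supplies the context that decides where each model applies.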

Now, back to the question: Is ChatGPT wrong?

You understood the question, so you knew the answer: three. However, ChatGPT interpreted the question based on how it processes language. AI, like a smart person, tries to understand what you’re asking, but it sometimes predicts (guesses) wrong. In this case, the language model might have broken the word “strawberry” into smaller parts, or “tokens,” and counted the “R”s based on those tokens. It’s a bit like asking, “Do I look fat in this dress?” The question isn’t just about the dress; it’s about seeking reassurance or validation. The listener might overthink it, considering emotional context or relationship dynamics, leading to a less straightforward response.

Similarly, when you ask ChatGPT how many “R”s are in “strawberry,” it might overthink the question. Instead of simply counting the letters, it infers hidden meaning or context from how the question is phrased. This can lead to errors, much like how someone might overanalyze the dress question and give a complicated answer.
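The token idea mentioned earlier can be sketched in a few lines of Python. The split of “strawberry” into three pieces and the numeric IDs below are made up for illustration; a real tokenizer may split the word differently. The sketch only shows the general point that a language model receives token IDs rather than individual letters.

```python
word = "strawberry"

# Counting letters directly is trivial when you can see the characters.
letter_count = word.lower().count("r")  # 3

# Hypothetical token split (an assumption, not a real tokenizer's output).
tokens = ["str", "aw", "berry"]

# Hypothetical vocabulary mapping each token to an integer ID.
vocab = {"str": 101, "aw": 102, "berry": 103}

# What the model actually receives: a list of IDs with no letters in it.
token_ids = [vocab[t] for t in tokens]  # [101, 102, 103]

# The letters are still there if you look inside each token...
r_per_token = {t: t.count("r") for t in tokens}  # {'str': 1, 'aw': 0, 'berry': 2}

# ...but nothing in [101, 102, 103] says how many "r"s each ID contains.
print(letter_count, token_ids, r_per_token)
```

A person reads ten letters and counts three “R”s. A model given only the IDs has to predict the count from patterns it learned in training, which is where a wrong guess can creep in.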

So next time you eat a strawberry, remember that your AI can overthink.